I’ve been using VisualVM on and off with modest success since I first found out about it at JavaOne 2008. In addition, since JDK 6 update 7, it’s been included as part of the standard JDK installation (released in July of 2008). I was quite happy when it helped me identify bottlenecks and cut down the time to run a suite of unit tests by 50% (from 2 minutes to 1 minute). After all, the less time it takes to run unit tests, the more often they’ll get run. However, my biggest success using VisualVM came in early April, when I was asked to figure out why a use case was exhibiting disturbing performance characteristics.

When I first walked through the use case, it was taking about 10 seconds to run with 50 items. The time appeared to be proportional to the number of items or perhaps even worse. This was a scary prospect given that there would often be more than 10,000 items in a real production setting and that the use case was intended to be interactive (as opposed to a batch or background task). A careful code review might have revealed the problem, but I knew it would be far more efficient to profile the code as a way to identify hot spots. I started up VisualVM, connected to the relevant JVM, turned on CPU profiling, and collected data for the use case. Sadly, I seem to have lost the relevant snapshot, so you’ll have to trust me when I tell you that the method SqlQueryFile.isSupported() was being called over 36 million times! I made some minor tweaks to the method itself which improved its performance by about 10 percent – okay, but not nearly enough. I next identified what was effectively a loop invariant. The isSupported() method was being called repeatedly with the same query and schema. As you can see from the snippets below, I pulled that check out of the nested loop.

Before:

for(Platform platform=p; platform != null; platform=platform.getParent()) {

for(SqlQuery q : metricQueries) {

if (isSupported(q, datasourceSchema)) {

if(q.getMetricPath().equalsIgnoreCase(metricPath) &&

q.getPlatform().equalsIgnoreCase(platform.getName())) {

if(ret==null) {

ret=q;

} else {

if(isMoreRecentThan(q, ret)) {

ret=q;

}

}

}

}

}

}

After:

Collection supportedQueries = new ArrayList();

for (SqlQuery q : metricQueries) {

if (isSupported(q, datasourceSchema) && q.getMetricPath().equalsIgnoreCase(metricPath)) {

supportedQueries.add(q);

}

}

// need to optimized this code for better performance

for (Platform platform = p; platform != null; platform = platform.getParent()) {

for (SqlQuery q : supportedQueries) {

if (q.getPlatform().equalsIgnoreCase(platform.getName()) &&

(ret == null || isMoreRecentThan(q, ret))) {

ret = q;

}

}

}

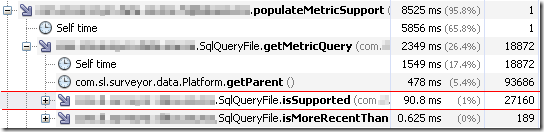

When I ran the profiler again, it turned out I'd vastly reduced the number of calls to (~36 million to ~9 million) and the time spent in (80+% to 26.3%) the isSupported() method, as you can see in the picture below:

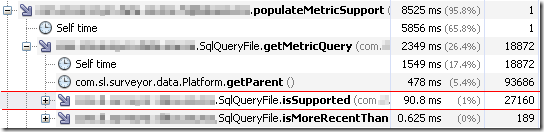

I probably could have left it there, but after taking another quick look, I noticed a very simple way to save even more time. I could flip the order of the conditional expression in the first if statement. That way, the cheap operation (which usually returns false) would come first and frequently allow us to skip the evaluation of isSupported().

if (q.getMetricPath().equalsIgnoreCase(metricPath) && isSupported(q, datasourceSchema)) {

To my surprise, this cut the number of calls to around 27,000 and the time spent in the method to 1%!

With these optimizations in place, the same use case with 11,000 items now took less than a second. Thanks VisualVM!

Update: This post was a second place winner in the Java VisualVM Blogging Contest!